In this post I discuss some of the potential usability issues when working with static site generation.

This is the second post in a series describing my home-grown CI-CD system. In Post-I, I described my first home-grown CI-CD architecture.

Motivation for going static

My CI-CD system was built to house one website in particular: a volunteer site with requirements that made it difficult to host in a typical content management system (CMS) like WordPress or Joomla. The site in question (CNTHA) contains historical information about Canada's naval technology. None of the material is considered sensitive, but if you combine the topic of navy information with PHP signatures that bots will pick up, you apparently make the site a very attractive target for attackers.

| Period | Site Archetype | Deployment | Notes |
|---|---|---|---|
| 2000-2004 | Raw HTML | Residential Internet with Dynamic DNS | No hacking, but the dependency on one contributor made it slow to change. |
| 2004-2008 | PHP CMS | Residential Internet with Static IP | Multiple contributors, but frequent comment spam. |
| 2008-2012 | PHP CMS | Commercial Web Host | Again defaced by comment spam, escalating to backdoors. |
| 2012-2015 | PHP CMS | Virtual Private Server | First time I'm hosting. VPN protected. Safe for years until December 2015, when a backdoor was detected! |
| Early 2016 | — | — | CI-CD tool built. Static site overhaul conducted. |
| 2016-present | Static Site Generation | Dedicated Server Rental | Smooth seas on the security front. 100% secure and fast, but frustrating for non-programmers to edit. |

The website had gone through several hosts before it came to me in 2012. I locked down access to the PHP-managed front end behind a VPN and wrote scripts that ran every six hours to check for backdoors. In December 2015 a backdoor was detected, in the form of a fake image. The persistence of hacker attention led me to seek a more secure approach to content management.
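The checking scripts themselves aren't shown here, so the following is only a hypothetical Python sketch of one check that would catch a fake-image backdoor: flag any file with an image extension whose leading bytes don't match that format's signature, or that contains PHP-like markers. The function name `suspicious_images` and the marker list are assumptions, and a short marker such as `eval(` can occasionally occur by chance inside a genuine image, so hits need a human look.

```python
from pathlib import Path

# Leading "magic" bytes for a few common image formats.
IMAGE_SIGNATURES = {
    ".jpg": [b"\xff\xd8\xff"],
    ".jpeg": [b"\xff\xd8\xff"],
    ".png": [b"\x89PNG\r\n\x1a\n"],
    ".gif": [b"GIF87a", b"GIF89a"],
}

# Hypothetical byte strings suggesting embedded PHP.
SUSPICIOUS_MARKERS = [b"<?php", b"eval(", b"base64_decode("]


def suspicious_images(root):
    """Return image files under root that either lack a valid image header
    or contain PHP-like markers (e.g. a PHP script renamed to .jpg)."""
    flagged = []
    for path in Path(root).rglob("*"):
        ext = path.suffix.lower()
        if not path.is_file() or ext not in IMAGE_SIGNATURES:
            continue  # only inspect files that claim to be images
        data = path.read_bytes()
        bad_header = not any(data.startswith(sig) for sig in IMAGE_SIGNATURES[ext])
        has_php = any(marker in data for marker in SUSPICIOUS_MARKERS)
        if bad_header or has_php:
            flagged.append(path)
    return sorted(flagged)
```

Scheduled with something like cron every six hours, a check of this kind would report any flagged paths for review; it catches both a plain renamed script and a "polyglot" file that starts with a valid image header but carries PHP after it.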

Lessons Learned

While the bad guys have had no effect since the 2016 static rewrite, the change has been hard on the good guys too. Eliminating the PHP CMS has made the site much harder for contributors to edit. One of the hopes for the site was that it would grow into a community. With no technical staff besides me (and I was employed full-time elsewhere), the site needed to run itself, external contributions and all.

The contributors are all engineers, but they were accustomed to building ships, not websites, so they had some difficulty with the software development approach I had taken. Having studied a little Human-Computer Interaction (HCI), I really should have done the following:

- understand the actual activity most contributors are engaged in,

- and mind the conceptual gaps imposed by the tools.

Understanding the activity

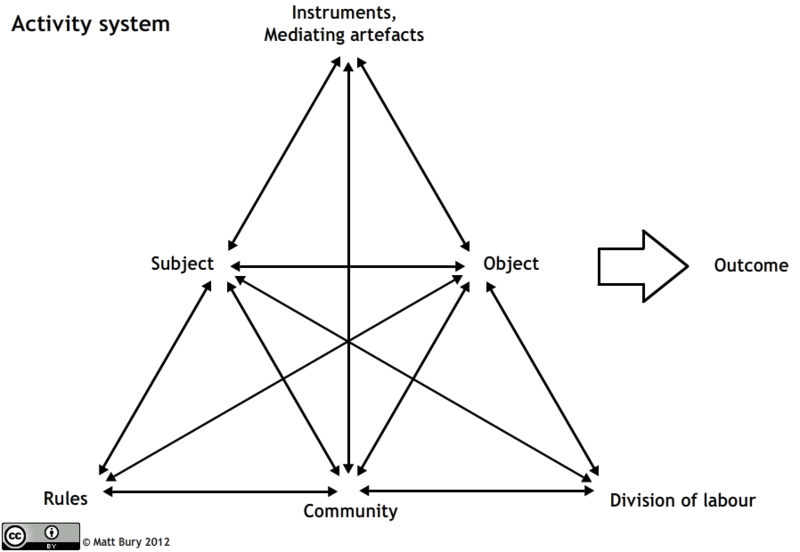

One of the analytical frameworks used in HCI is Activity Theory, which can help reveal the challenges faced by actors engaged in a collaborative activity. In the middle row of the Activity Theory diagram, individuals (subjects) act on objects to achieve a desired outcome. Mediating the activity are instruments and artefacts (top of the triangle), as well as the rules, community, and division of labour (bottom of the triangle).

When I built my CI-CD system, my goal was to maintain a moderately complex static website using development tools I understood. For source documents I chose Markdown, and I built portions of the site using a static site generator called Metalsmith (more on this in future posts). Other parts used PDF manipulation tools available in Linux, with some Bash scripts to knit it all together.
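Metalsmith models a build as a chain of plugins, each receiving a shared collection of files keyed by path and free to add, remove, or transform entries. The sketch below mimics that idea in Python to show the shape of the "source documents in, website out" pipeline; it is not Metalsmith's API (Metalsmith is a Node.js tool), and the plugin names and the tiny Markdown subset (only `# ` headings and paragraphs) are hypothetical.

```python
import html
from typing import Callable, Dict, List

# Each file maps a relative path to a dict holding its text "contents" and
# any metadata (such as "title"), loosely mirroring Metalsmith's files object.
Files = Dict[str, dict]
Plugin = Callable[[Files], None]


def markdown_to_html(files: Files) -> None:
    """Hypothetical plugin: convert a tiny Markdown subset to HTML and
    rename each .md entry to .html."""
    for path in [p for p in files if p.endswith(".md")]:  # snapshot keys first
        parts = []
        for block in files[path]["contents"].strip().split("\n\n"):
            if block.startswith("# "):
                parts.append(f"<h1>{html.escape(block[2:].strip())}</h1>")
            else:
                parts.append(f"<p>{html.escape(block.strip())}</p>")
        entry = files.pop(path)
        entry["contents"] = "\n".join(parts)
        files[path[:-3] + ".html"] = entry


def wrap_in_layout(files: Files) -> None:
    """Hypothetical plugin: wrap every HTML body in a shared page layout."""
    for entry in (e for p, e in files.items() if p.endswith(".html")):
        title = html.escape(entry.get("title", "CNTHA"))
        entry["contents"] = (f"<html><head><title>{title}</title></head>"
                             f"<body>{entry['contents']}</body></html>")


def build(files: Files, plugins: List[Plugin]) -> Files:
    """Run each plugin in order over the shared files mapping."""
    for plugin in plugins:
        plugin(files)
    return files


site = build({"index.md": {"contents": "# Ships\n\nA short history.", "title": "Home"}},
             [markdown_to_html, wrap_in_layout])
```

Metalsmith itself reads a source directory and writes the transformed files to a destination directory; the in-memory dictionary here just keeps the sketch self-contained. The appeal for a developer is that each step is small and composable, which is exactly the kind of abstraction that stays invisible to a contributor who only wants to edit a page.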

In my mind, the activity was "take source documents and data from multiple contributors, transform them into a website, and deploy the result". I was thinking only of the top of the Activity Theory triangle: I accepted the constraints imposed by the tools I had chosen and dove straight into the Subject => Object => Outcome axis. Having chosen (or built) the instruments myself, I felt quite confident they were a reasonable solution to the activity as I understood it.

What I failed to appreciate was how other users would perceive the activity. The moment I shared these tools with contributors, they embarked on an activity related to, but different from, the one I had originally conceived. For them, the activity was "edit the website", and they came to it with memories of subtly different editing tasks (e.g. editing an MS Word document) that offered very different instruments and imposed very different rules. While they were willing to live with the new tools and accepted the constraints the process imposed, as non-programmers they found these choices arbitrary and counter-productive. It seems I had dragged non-coders a little too far into a coder environment.

Minding the gap

As a software developer, I am accustomed to a significant distance between my inputs to a compilation process (i.e. source code) and the executable artifact generated from that code (i.e. the runnable program). This kind of gap, the gulfs of execution and evaluation, is well understood by HCI researchers; Norman described it in 1986.

One solution for reducing this gap is a design goal called direct manipulation, first articulated by Ben Shneiderman in the early 1980s. Shneiderman's idea was that users should feel as though they are directly controlling the objects represented by the computer.

For document editing, direct manipulation was approximated by WYSIWYG ("what you see is what you get") editing tools. Few coders expect their programming tools to provide this level of immediacy (for inspiration, see Bret Victor, one exception who proves the rule).

In my haste to outrun the ravening horde attacking the PHP site, I had failed to mind the gap. In fact I had left a conceptual canyon in place between a contributor’s inputs and the system’s output on a website.

Proposed Solutions

My new challenge is to pull back toward the WYSIWYG world, then try to imagine tools that would get us somewhere in the middle. I realize others have done this before, but I maintain it's worth the effort.

In future, my goal is a tighter editing loop for contributors to the site, while helping them understand why separation of concerns (keeping content apart from presentation) matters: it is what enables features like responsive design and internationalization (i18n).
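To make the separation-of-concerns argument concrete, here is a minimal, hypothetical sketch: contributors edit only per-locale text, while a single template owns the markup. Adding a language touches only the content, and changing the layout (including the viewport tag and stylesheet where responsive behaviour would live) touches only the template. The `PAGE_TEXT` structure, the locale codes, and the template are illustrative assumptions, not the site's actual setup.

```python
import html
from string import Template

# Content: what contributors edit, one entry per locale (hypothetical).
PAGE_TEXT = {
    "en": {"title": "Naval Technology", "body": "Histories of Canadian naval engineering."},
    "fr": {"title": "Technologie navale", "body": "Histoire du génie naval canadien."},
}

# Presentation: owned by the site maintainer. The viewport meta tag (plus any
# CSS media queries) is where responsive layout lives, untouched by content edits.
PAGE_TEMPLATE = Template(
    '<html lang="$lang"><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1">'
    "<title>$title</title></head><body><h1>$title</h1><p>$body</p></body></html>"
)


def render_all(text_by_locale):
    """Render one page per locale from the same template, escaping content."""
    return {
        lang: PAGE_TEMPLATE.substitute(
            lang=lang, **{key: html.escape(value) for key, value in text.items()}
        )
        for lang, text in text_by_locale.items()
    }


pages = render_all(PAGE_TEXT)
```

The trade-off is the one this post wrestles with: the split keeps every page consistent and translatable, but a contributor editing `PAGE_TEXT` no longer sees the finished page while typing.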

Challenges Remaining

Developer/Deployment Parity

It's easy to provide nearly immediate builds, and therefore a much tighter feedback loop, on a UNIX-based host (e.g. macOS or Linux), but setting up the equivalent on Windows requires additional steps, such as installing the Windows Subsystem for Linux (WSL). My next platform must take ease of tool installation into account.
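One way to get a rebuild-on-save loop without depending on UNIX tooling is a small watcher written in a cross-platform language. The hypothetical sketch below polls a source directory's modification times and calls a `rebuild` callback (an assumption standing in for whatever build step the site uses) whenever files are added, removed, or changed. Polling is simple and portable, including on Windows without WSL, at the cost of a little CPU compared with OS-level change notifications.

```python
import time
from pathlib import Path


def snapshot(source_dir):
    """Map every file under source_dir to its last-modified time (ns)."""
    return {p: p.stat().st_mtime_ns for p in Path(source_dir).rglob("*") if p.is_file()}


def changed(before, after):
    """Return paths added, removed, or modified between two snapshots."""
    return sorted(p for p in before.keys() | after.keys() if before.get(p) != after.get(p))


def watch(source_dir, rebuild, interval=0.5, max_polls=None):
    """Poll source_dir and call rebuild(changed_paths) whenever something changes.
    max_polls=None watches forever; a number stops after that many polls."""
    previous = snapshot(source_dir)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval)
        current = snapshot(source_dir)
        diff = changed(previous, current)
        if diff:
            rebuild(diff)
        previous = current
        polls += 1
```

A contributor would start something like `watch("src", rebuild_site)` (with `rebuild_site` being the hypothetical build entry point) and keep a browser open on the output, which narrows the gap between editing and seeing the result.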

Collaboration vs Security

Any sufficiently interactive site requires dynamic page rendering of some type. Since dynamic rendering is an attack vector, we must strike a careful balance. This site demonstrates that balance, with a static front end and protected areas (see privacy policy). This work will be described in future updates.

Authenticity vs Accessibility

The CNTHA site that inspired this work is all about history, but some of the document formats could use some sprucing up. We need to decide what’s more important: the authenticity of the original format, or the ability to find, access, and interpret the content.

WYSIWYG vs Responsive Design

One important design consideration in choosing technologies for web page editing will be the trade-off between WYSIWYG and the adaptive layouts needed for the multitude of devices people use to browse the web.

Banner photo by Alex Radelich on Unsplash, effects by yours truly